Have I ever mentioned that when it rains, water comes into our basement?

Have I ever mentioned that said water flows less than an inch directly below the only place in the house my servers can go?

Ugh.

Month: October 2005

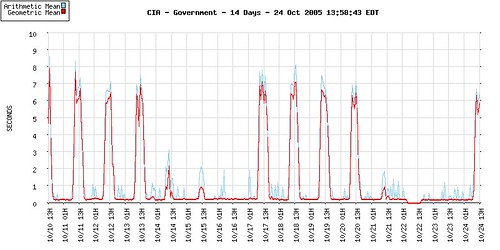

GrabPERF: CIA Web Site Run By Very Tired Hamsters

I have said it before, and I say it again: the performance profile of the CIA Web Site SUCKS.

How can you stand this if you are a major government agency? Guess no one at the CIA cares.

GrabPERF: Comparing Technorati Blog and Tag Search

Normally when I discuss the performance of a page I am measuring using GrabPERF, it’s either good news (“you just got 5 times faster!”) or bad news (“your page hasn’t loaded in 6 months; you still there?”).

Today, something a little different: a question. What’s the question?

Why is the performance of a Technorati Blog (aka Traditional) Search so different from a Technorati Tag Search?

For those of you who have been around for a while, you know that Technorati allows you to search for results based on a Traditional search engine methodology, which is date-ranked, most recent first. It also provides a way to search through the user-defined tags that are appended to posts, or listed as category titles.

The issue that I have been seeing from my measurements is that Tag Search is performing substantially worse than Traditional Search.

What I need to understand from the Technorati team are the particular technical challenges that differentiate Traditional v. Tag Searching, because the difference in performance is astonishing.

And then there is the success rate of the Tag Search.

When I examine the data, almost all of the errors on the Tag Search measurement are Operation Timeouts. I have set the GrabPERF Agent to time out when no response has come back from the server in 60 seconds. So, effectively, 15% of the Tag Searches do not return data to the client within 60 seconds.
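To make the timeout figure concrete, here is a minimal sketch of how a measurement with a 60-second timeout and the resulting timeout rate could be computed. It is not the GrabPERF Agent's code; `measure`, `timeout_rate`, and the outcome labels are hypothetical names chosen for illustration.

```python
import socket
import time
import urllib.error
import urllib.request

TIMEOUT_SECONDS = 60  # the agent timeout described above


def measure(url, timeout=TIMEOUT_SECONDS):
    """Fetch url once and return (outcome, elapsed_seconds).

    outcome is "success", "timeout", or "error" (hypothetical labels).
    """
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()
        outcome = "success"
    except (socket.timeout, TimeoutError):
        # Timeout while reading the response.
        outcome = "timeout"
    except urllib.error.URLError as exc:
        # urlopen wraps connect-phase timeouts in URLError.
        timed_out = isinstance(exc.reason, (socket.timeout, TimeoutError))
        outcome = "timeout" if timed_out else "error"
    except OSError:
        outcome = "error"
    return outcome, time.monotonic() - start


def timeout_rate(outcomes):
    """Return the fraction of measurements that ended in a timeout."""
    if not outcomes:
        return 0.0
    return sum(1 for o in outcomes if o == "timeout") / len(outcomes)
```

For example, 3 timeouts out of 20 measurements gives a rate of 0.15, the same proportion as the 15% quoted above.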

So, while the Traditional Search has been tuned and optimized, there appears to be much work left to make the Tag Search an effective and useful tool.

Technorati: technorati, search, tagging, seo

IceRocket: technorati, search, tagging, seo

Never Work Alone: Integrating your IT Team…or vice versa

The gang at the Never Work Alone blog have a fantastic post describing some of the solutions to the Introverted IT / Extroverted Sales-Marketing integration issue.[here]

The best points:

- When hiring, place a premium on being able to explain technical issues to users and determine whether they’ve mastered the material. Expect this to cost more.

- Offer raises for taking training in oral technical communication

- Offer “days off” learning the essential business function of the department. You don’t understand what they do, they often don’t really GET what you do either, nor why it’s important – give them a chance to understand each other

- Train non-IT staff to repeat back in their own words what the IT person explained to them and confirm that they got it right (a good idea for any complex communication)

My eternal salvation comes from falling into the first category listed above. I can tear apart a packet trace and spot issues at the TCP layer, and then turn around and explain the issue to the VP of Marketing in terms that she can understand and that are relevant to her.

That is not dumbing it down, as many IT people feel. This strategy (or survival mechanism) allows a technical person to appeal to a wider audience. Being recognized across your organization, not just in your team, leads to greater rewards in the long run.

Search Engine Referral Statistics

Jeremy Zawodny asked the community for stats on where their search engine referrals were coming from.[here]

Google is crushing all other engines. However, I have noticed that my traffic has dropped substantially since the new Page Rank indexing began this week. Wonder what’s up there…

Ok, this falls under freaky spam

This was in my mailbox just now. Huh?!?!?

NEW BBC SERIES

Thinking about having a baby?

We are looking for couples to take part in a new BBC series exploring the science behind getting pregnant and pregnancy. If you are thinking about trying for a baby or have already started trying and would like to find out more, please call us on 0141 204 6620 or e-mail: baby@mentorn.tv

PLEASE DO NOT REPLY TO THIS EMAIL ADDRESS – REPLY TO mailto:BABY@MENTORN.TV

We’d like to advise you that we got your email address from a mailing list company. Their lists are compiled from those who have agreed, when visiting relevant websites, to receive contact from third parties

If you would like to be removed from our list please reply to mailto:BABYLIST@mentorn.tv

I am trying to figure out which list I said yes to in order to get this email.

GrabPERF: Yahoo BlogSearch Tuning

The Yahoo! BlogSearch started off showing less than remarkable results in terms of performance (I leave the qualitative judgement to other critics). Over the last 11 days, the team at Yahoo! have realized that there may be an issue, and they have been working on it.

On Thursday afternoon (Oct 20, 2005), they obviously implemented a major change that caused performance to improve dramatically.

This improvement was due to some back-end changes in the search itself. How do I know this? All the improvement came in first-byte (server response time).

| HOUR | AVG_SERVER_RESPONSE |
| --- | --- |
| 10/20/2005 00:00:00 | 1.2159322 |
| 10/20/2005 01:00:00 | 1.2658667 |
| 10/20/2005 02:00:00 | 1.3596000 |
| 10/20/2005 03:00:00 | 1.1870328 |
| 10/20/2005 04:00:00 | 1.1672373 |
| 10/20/2005 05:00:00 | 1.2970500 |
| 10/20/2005 06:00:00 | 1.2220333 |
| 10/20/2005 07:00:00 | 1.3705500 |
| 10/20/2005 08:00:00 | 1.4188667 |
| 10/20/2005 09:00:00 | 1.4439000 |
| 10/20/2005 10:00:00 | 1.5772000 |
| 10/20/2005 11:00:00 | 1.4943559 |
| 10/20/2005 12:00:00 | 1.4794426 |
| 10/20/2005 13:00:00 | 1.4017333 |
| 10/20/2005 14:00:00 | 1.6012500 |
| 10/20/2005 15:00:00 | 1.4380333 |
| 10/20/2005 16:00:00 | 1.1326441 |
| 10/20/2005 17:00:00 | 0.5613000 |
| 10/20/2005 18:00:00 | 0.5656833 |
| 10/20/2005 19:00:00 | 0.5766833 |
| 10/20/2005 20:00:00 | 0.5219831 |
| 10/20/2005 21:00:00 | 0.4722131 |
| 10/20/2005 22:00:00 | 0.5022333 |
| 10/20/2005 23:00:00 | 0.4569138 |
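One way to locate the change in the hourly averages above is to look for the largest hour-over-hour drop and compare the means on either side of it. This is a minimal sketch, assuming the values are copied by hand from the data above; the variable and function names are illustrative and not part of GrabPERF.

```python
from statistics import mean

# Hourly AVG_SERVER_RESPONSE for 10/20/2005, hours 00 through 23,
# copied from the data above.
AVG_SERVER_RESPONSE = [
    1.2159322, 1.2658667, 1.3596000, 1.1870328, 1.1672373, 1.2970500,
    1.2220333, 1.3705500, 1.4188667, 1.4439000, 1.5772000, 1.4943559,
    1.4794426, 1.4017333, 1.6012500, 1.4380333, 1.1326441, 0.5613000,
    0.5656833, 0.5766833, 0.5219831, 0.4722131, 0.5022333, 0.4569138,
]


def largest_drop_hour(values):
    """Return the index whose value fell the most relative to the previous one."""
    return max(range(1, len(values)), key=lambda i: values[i - 1] - values[i])


change_hour = largest_drop_hour(AVG_SERVER_RESPONSE)
before = mean(AVG_SERVER_RESPONSE[:change_hour])
after = mean(AVG_SERVER_RESPONSE[change_hour:])

print(f"largest drop at hour {change_hour:02d}:00")
print(f"mean before: {before:.4f}, mean after: {after:.4f}, "
      f"ratio: {before / after:.2f}")
```

With this data the largest drop falls at 17:00, which fits a change made on Thursday afternoon, and the average after the drop is roughly 2.6 times lower than the average before it.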

Would love to hear from the Yahoo team, and learn exactly what they did to bring about such a massive improvement.

Is Web 2.0 Suffocating the Internet?

At my job, I get involved in trying to solve a lot of hairball problems that seem obscure and bizarre. It’s the nature of what I do.

Over the last 3 weeks, some issues that we have been investigating as independent performance-related trends merged into a single meta-issue. I can’t go into the details right now, but what is clear to me (and some of the folks I work with are slowly starting to subscribe to this view) is that the background noise of Web 2.0 services and traffic has started to drown out, and, in some cases, overwhelm the traditional Internet traffic.

Most of the time, you can discount my hare-brained theories. But this one is backed by some really unusual trends that we found yesterday in the publicly available statistics from the Public Exchange points.

I am no network expert, but I am noticing a VERY large upward trend in the volume of traffic going into and out of these locations around the world. And these are simply the public peering exchanges; it would be interesting to see what the traffic statistics at some of the Tier 1 and Tier 2 private peering locations, and at some of the larger co-location facilities, look like.

Now to my theory.

The background noise generated by the explosion of Web 2.0 (i.e. “Always Online”) applications (RSS aggregators, update pings, email checkers, weather updates, Adsense stats, etc.) is starting to have a significant impact on the overall performance of the Internet as a whole.

Some of the coal-mine canaries, organizations that have extreme sensitivity to changes in overall Internet performance, are starting to notice this. Are there other anecdotal/quantitative results that people can point to? Have people trended their performance/traffic data over the last 1 to 2 years?

I may be blowing smoke, but I think that we may be quietly approaching an inflection point in the Internet’s capacity, one that sheer bandwidth itself cannot overcome. In many respects, this is a result of the commercial aspects of the Internet being attached to a notoriously inefficient application-level protocol, built on top of a best-effort delivery mechanism.

The problems with HTTP are coming back to haunt us, especially in the area of optimization. About two years ago, I attended a dinner run by an analyst firm where this subject was discussed. I wasn’t as sensitive to strategic topics then as I am now, but I can see that the concerns raised at that dinner have come to pass.

How are we going to deal with this? We can start with the easy stuff.

- Persistent Connections

- HTTP Compression

- Explicit Caching

- Minimize Bytes
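From the client side, the first three items can be sketched with Python's standard library: one connection reused for several requests, a gzip `Accept-Encoding` header, and conditional GETs that let the server answer `304 Not Modified` instead of resending the body. This is only an illustration under stated assumptions; `request_headers`, `decode_body`, `fetch_all`, and the `validators` mapping are hypothetical names, and the server has to support keep-alive, compression, and validators for any of it to help.

```python
import gzip
import http.client


def request_headers(etag=None, last_modified=None):
    """Build headers asking for keep-alive, gzip, and a conditional response."""
    headers = {
        "Connection": "keep-alive",   # persistent connection
        "Accept-Encoding": "gzip",    # HTTP compression
    }
    # Explicit caching: send back validators saved from an earlier response.
    if etag:
        headers["If-None-Match"] = etag
    if last_modified:
        headers["If-Modified-Since"] = last_modified
    return headers


def decode_body(body, content_encoding):
    """Decompress the body if the server says it is gzip-encoded."""
    if (content_encoding or "").lower() == "gzip":
        return gzip.decompress(body)
    return body


def fetch_all(host, paths, validators=None):
    """Fetch several paths over one connection.

    validators maps a path to {"etag": ..., "last_modified": ...} saved from
    a previous fetch. A path maps to None in the result when the server
    answers 304, meaning the cached copy is still good.
    """
    validators = validators or {}
    results = {}
    conn = http.client.HTTPConnection(host, timeout=60)
    try:
        for path in paths:
            headers = request_headers(**validators.get(path, {}))
            conn.request("GET", path, headers=headers)
            response = conn.getresponse()
            body = response.read()  # drain before reusing the connection
            if response.status == 304:
                results[path] = None
            else:
                encoding = response.getheader("Content-Encoding")
                results[path] = decode_body(body, encoding)
    finally:
        conn.close()
    return results
```

A polling client, such as an RSS aggregator, that stores the `ETag` or `Last-Modified` value from each response and sends it back on the next poll covers the fourth item as well, because an unchanged feed costs only a handful of header bytes.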

The hard stuff comes after: how do we fix the underlying network? What application is going to replace HTTP?

Comments? Questions?